Earlier this year, ATEME made history by winning three Technology & Engineering Emmy® awards from The National Academy of Television Arts & Sciences (NATAS) for its achievements in video compression and encoding for media delivery and distribution. In this blog series, I will provide an overview of the technologies honored with these awards and explain how they benefit our customers, starting with the award for the development of perceptual metrics for video compression.

Developing an objective metric for measuring video quality that closely mimics how the human visual system perceives quality had, for decades, been an elusive goal of the video-compression community. Several objective metrics, such as the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM), had been developed but, in many situations, they correlated poorly with human perception of quality.
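To make the limitation concrete, here is a minimal sketch of the standard PSNR formula (10 · log10(MAX² / MSE)) applied to a toy example. The function name `psnr`, the 8×8 grey patch, and the two distortions are illustrative assumptions, not material from ATEME. The point is only that PSNR depends on the mean squared error alone, so two distortions that a viewer may well judge differently can receive the same score.

```python
import math

def psnr(reference, distorted, max_value=255):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)

# Hypothetical toy data: a flat mid-grey 8x8 patch, flattened to 64 values.
reference = [128] * 64

# Distortion A: every pixel is off by 2 (a faint, uniform brightness shift).
uniform_shift = [p + 2 for p in reference]

# Distortion B: one pixel is off by 16 (a single speck), the rest untouched.
single_speck = list(reference)
single_speck[0] += 16

psnr_a = psnr(reference, uniform_shift)
psnr_b = psnr(reference, single_speck)
print(f"uniform shift: {psnr_a:.2f} dB, single speck: {psnr_b:.2f} dB")
```

Both distortions have the same mean squared error, so the two PSNR values are identical even though the errors are distributed very differently across the picture.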

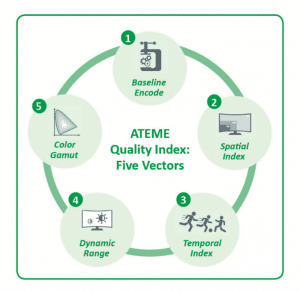

To respond to this need, our video scientists developed the ATEME Quality Index (AQI). The AQI combines five independent quality metrics (as shown in the accompanying figure):

- The Encoding Quality, an objective metric that reflects raw encoder efficiency before other factors are taken into consideration.

- The Spatial Index, a vector that describes the expected quality loss incurred by resolution down-sampling in relation to viewing parameters (e.g., viewing distance) and content spatial features.

- The Temporal Index, a vector that leverages content motion analysis to describe the impact of framerate reduction, given certain viewing parameters.

- The Dynamic Range Index, which captures the ratio between the brightest and darkest parts of the picture and the impact on perceived quality of tone-mapping HDR content to SDR formats.

- The Color Gamut Index, which describes how transformations relating to the content color characteristics will impact the perceived quality of the output.

ATEME has tuned the AQI against a large, proprietary database of tens of thousands of video-quality test encodings spanning codecs and resolutions. Many of these tests were conducted by highly skilled "Golden Eye" video engineers at pay-TV operators around the globe. Machine Learning (ML) techniques were used to train the AQI algorithms so that their outputs match the experts' visual quality evaluations.

You may wonder what practical use such a perceptual quality metric may have. That takes us to the next Emmy award, pertaining to the use of artificial intelligence (AI) to optimize video compression. Stay tuned for our next blog article, coming soon!